Recently, the C-STAR group, led by Dr. Greg Hickok, published an article in the journal Cortex titled “Neural networks supporting audiovisual integration for speech: A large-scale lesion study.” This was the first study to include information from a large number of participants who had experienced a stroke and had also completed an audiovisual task. A link to the abstract can be found here. Below is a summary of the article.

Background

During conversation, looking at your conversation partner can enhance how the spoken message is perceived. For example, studies have shown that in noisy environments, being able to see a speaker while they are talking can help you perceive what they are saying. Combining the information from the spoken message with the visual information from a speaker’s face is called audiovisual integration. When visual information helps perception, this is called the audiovisual advantage. However, there are times when visual information can change what we think we hear, and I’ll explain that more in the Study Procedures section.

Several studies have investigated the parts of the brain responsible for audiovisual integration. However, no study has looked at this in a large number of stroke survivors who may have difficulty with audiovisual integration. Therefore, the purpose of this study was to identify how brain damage affects audiovisual integration. Identifying how damage from the stroke affects audiovisual integration will tell us which parts of the brain are related to audiovisual integration.

Study Procedures

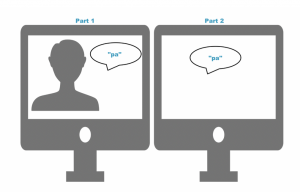

One hundred people participated in this study. Each participant did two things (illustrated below):

- Watched a speaker say the sounds “pa” and “ka” (audiovisual condition)

- Listened to a speaker say the sounds “pa” and “ka” (auditory condition)

In each case, the participant had to say what sound they thought the speaker said.

In some of the audiovisual conditions, the speaker’s mouth movements did not match what he said. In these cases, people often think they heard the “ta” sound, even though the speaker didn’t say “ta.” This is called the McGurk Effect. The McGurk Effect is a good example of how visual information can affect what we think we hear. This short video explains the McGurk Effect: https://www.youtube.com/watch?v=jtsfidRq2tw

To understand which parts of the brain process audio, visual, and audiovisual information, we looked at how accurate participants were in identifying each sound, and compared this accuracy to patterns of brain damage caused by stroke. We were specifically interested in the following:

- How adding either matching visual information or mismatching visual information (e.g., McGurk trials) affected perception

- How perception of matching and mismatching information was represented in the brain
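For readers who like to see the arithmetic, here is a minimal sketch of how accuracy on the matching trials could be compared between the two conditions. It is an illustration only, not the analysis used in the article: the trial records are made up, the function names are hypothetical, and treating the audiovisual advantage as "audiovisual accuracy minus auditory accuracy" is an assumption. Mismatching (McGurk) trials and the comparison with patterns of brain damage are not covered here.

```python
from collections import defaultdict

# Hypothetical trial records: (condition, sound presented, participant's response).
# These values are made up for illustration and are not data from the study.
trials = [
    ("auditory", "pa", "pa"),
    ("auditory", "pa", "ka"),
    ("auditory", "ka", "ka"),
    ("auditory", "ka", "ka"),
    ("audiovisual", "pa", "pa"),
    ("audiovisual", "pa", "pa"),
    ("audiovisual", "ka", "ka"),
    ("audiovisual", "ka", "pa"),
]


def accuracy_by_condition_and_sound(trials):
    """Return {(condition, sound): proportion of correct responses}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for condition, sound, response in trials:
        key = (condition, sound)
        total[key] += 1
        if response == sound:
            correct[key] += 1
    return {key: correct[key] / total[key] for key in total}


def audiovisual_advantage(accuracy, sound):
    """Assumed definition: audiovisual accuracy minus auditory-only accuracy."""
    return accuracy[("audiovisual", sound)] - accuracy[("auditory", sound)]


accuracy = accuracy_by_condition_and_sound(trials)
for sound in ("pa", "ka"):
    print(sound, audiovisual_advantage(accuracy, sound))
```

A positive value means that seeing the speaker helped for that sound, and a negative value means it hurt. In this made-up example the value is positive for “pa” and negative for “ka”.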

Results

The results of the study showed that adding a visual component improved perception for the “pa” sound only. When comparing performance on the audiovisual tasks to patterns of brain damage, we found that areas responsible for visual and auditory processes were important for perception, as well as multimodal areas of the brain.

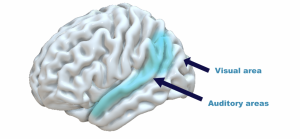

These results emphasize that specific areas in the brain integrate both auditory and visual information. Importantly, damage to these areas can impair audiovisual integration by affecting either the auditory message or the visual input. See the figure above for more details.

The figure above indicates the location of auditory and visual areas of the brain. The blue areas indicate portions of the brain that appear to be important for audiovisual integration.
