My passion for understanding how things work, and why they break down, began in early childhood. My generation grew up with the advent of electronic technologies, from remote-control cars to videotape players and the Walkman! I remember never being much interested in playing with remote-control cars or watching cartoons on the video player. Instead, I was always curious to find out what went on inside those devices and who made them work with such flawless precision. This passion led me to study electrical engineering in college and later, in 2003, to pursue a master's degree in biomedical engineering. I attended many lectures at that time, but one given by one of my professors stood out, because it made me wonder about the importance of developing signal processing approaches for studying human biological systems:

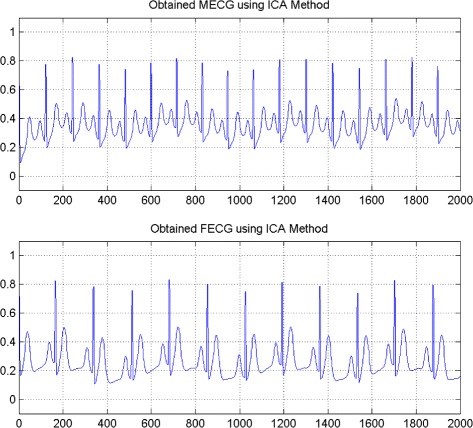

“Imagine we want to solve the following problem: a cardiologist has recorded the heartbeat signal (called the ECG, for electrocardiogram) of a pregnant woman whose baby is suspected of having a specific cardiac disorder. Our goal is to process this ECG signal to extract the baby’s heart rhythm and determine whether the beating pattern resembles that of a normal heart or shows signs of abnormal muscle activity that could prompt follow-up studies. The challenge is that we are dealing with a mixture signal: the sum of the mother’s heartbeat, at a much larger amplitude (her heart muscle is much bigger), and the baby’s heartbeat, at a much smaller amplitude. Because the mother’s heartbeats overshadow the baby’s true ECG signal, it is difficult to make accurate observations for any potential clinical diagnosis and follow-up treatment.” (For an example, see Figs. 1 and 2 below from a paper by Ghazdali et al., 2015.)

Figs. 1 and 2. Adapted from Ghazdali et al., Theoretical Biology and Medical Modelling (2015).
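To make the mixture problem concrete, here is a minimal sketch on synthetic data. It assumes a 500 Hz sampling rate and uses Gaussian pulses as crude stand-ins for heartbeats: maternal beats at 75 bpm and fetal beats at about 140 bpm with one tenth of the amplitude. It also assumes that the maternal beat times are already known, which is plausible because the maternal beats dominate the recording. The sketch uses simple maternal template subtraction, a basic technique chosen here only for illustration. It averages the mixture around each maternal beat and subtracts that average. Because the fetal beats are not time-locked to the maternal ones, averaging mostly washes them out of the template. This is not the method used by Ghazdali et al. or a clinical-grade approach. All function names are hypothetical.

```python
import math

FS = 500          # assumed sampling rate (Hz)
N = 10 * FS       # 10 s of synthetic data

def pulse_train(centers, width, amp, n=N):
    """Sum of Gaussian pulses (width in samples): a crude stand-in for heartbeats, not a real ECG model."""
    x = [0.0] * n
    for c in centers:
        for i in range(max(0, c - 6 * width), min(n, c + 6 * width + 1)):
            x[i] += amp * math.exp(-0.5 * ((i - c) / width) ** 2)
    return x

def cancel_maternal(x, beats, half=50):
    """Average x in a +/-half window around each maternal beat, then subtract that template at every beat."""
    template = [0.0] * (2 * half + 1)
    for c in beats:
        for j in range(-half, half + 1):
            template[j + half] += x[c + j] / len(beats)
    residual = list(x)
    for c in beats:
        for j in range(-half, half + 1):
            residual[c + j] -= template[j + half]
    return residual

def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

maternal_beats = [200 + 400 * k for k in range(12)]  # every 0.8 s, i.e. 75 bpm
fetal_beats = [50 + 215 * k for k in range(24)]      # every 0.43 s, about 140 bpm
maternal = pulse_train(maternal_beats, width=10, amp=1.0)
fetal = pulse_train(fetal_beats, width=5, amp=0.1)   # ten times smaller than maternal
mixture = [m + f for m, f in zip(maternal, fetal)]   # the recorded sum of both hearts

residual = cancel_maternal(mixture, maternal_beats)
print(round(pearson(mixture, fetal), 2), round(pearson(residual, fetal), 2))
```

The first printed correlation (mixture vs. true fetal signal) is small, while the second (residual vs. true fetal signal) is close to 1. Real recordings add noise, baseline wander, and beat-to-beat changes in the maternal waveform, so they would call for more careful separation methods than this toy example.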

For me, this intriguing question was the driving force behind an exciting path in life: learning more about signals and using them to study human biological mechanisms. During that time, I started working on a project in my master's thesis adviser’s lab that aimed to process voice signals from people with laryngeal disorders. Again, the concept was the same: can we identify specific features in a person’s voice signal that reveal the type of damage to the vocal fold muscles? In other words, do abnormal patterns of vocal fold vibration induce changes in a person’s acoustic voice signal that can be detected and used for clinical diagnosis and treatment decisions? (Do you see the analogy with the ECG signal and heartbeats here?)

After graduating from the master's program, I was lucky enough to be admitted as a doctoral student in Chuck Larson’s lab at Northwestern University in 2006. Chuck is an internationally recognized researcher in voice physiology and one of the first people to study the behavioral and neurobiological mechanisms of vocal motor control in non-human primates and humans. In his lab, Chuck and his colleagues used a novel experimental paradigm, called altered auditory feedback (AAF), to study how the brain uses sensory feedback to regulate different aspects of voice production and speech. A classic example of feedback-dependent vocal control is the Lombard effect. It explains why you speak more loudly when your auditory feedback is masked (or altered) by headphones playing loud music in your ears. The use of sensory feedback to control movement likely shares common principles, and perhaps neural circuitry, across different parts of the body and a variety of tasks: from producing speech or singing to playing tennis, driving a car, or riding a bike.

To dig into the underlying mechanisms of human voice production, Chuck and I began recording neural signals using electroencephalography (EEG). We measured activity in different brain regions while human subjects performed voice motor control tasks under AAF. In these tasks, the pitch frequency of their auditory feedback (i.e., the musical note of their voice during speaking) was manipulated in real time using electronic devices.

In 2014, I joined the Department of Communication Sciences and Disorders at the University of South Carolina as an assistant professor. I started collaborating with Julius Fridriksson and his colleagues in the Aphasia Lab and the Center for the Study of Aphasia Recovery (C-STAR) to study the neurobiological factors that predict vocal motor dysfunction in individuals with post-stroke speech and language impairments. In this new position, I started setting up my Speech Neuroscience Lab. The lab develops methodologies and trains students to conduct research on the neurobiological origins of voice and speech motor disorders in neurological populations, including people with post-stroke aphasia and Parkinson’s disease. This exciting line of research brings back memories of my early childhood, when I was curious to find out how things work, what happens when they break down, and what can be done to fix them. The main concept has remained the same, with one stark contrast. Instead of man-made toys, our research focuses on a complex system that humans did not design: the ability to produce speech. This ability is perhaps one of the most fundamental functions of the human brain. It distinguishes us from other animal species and underpins our cognitive abilities, imagination, creativity, social communication, and much more.

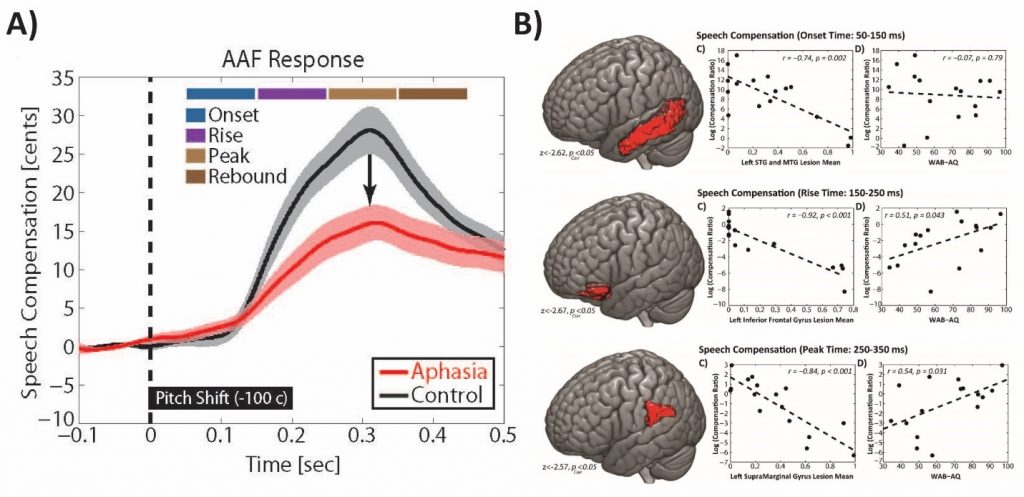

One of the most exciting lines of current work in our lab is developing signal processing pipelines that map speech motor behavior onto the brain networks that mediate it. To do this, we collect simultaneous speech and EEG signals from adults with no history of neurological conditions and compare their data with those from individuals with a history of post-stroke aphasia. This side-by-side comparison allows us to identify speech features and neural activity markers that reflect speech deficits in the latter group. More interestingly, we aim to combine these data with magnetic resonance imaging (MRI) data from stroke survivors to identify damaged brain structures that predict speech impairment. In a published study from our lab (Behroozmand et al., 2018), we identified three key anatomical brain areas that showed a direct relationship with speech motor disorder in post-stroke aphasia. Our data showed that, despite individual variability, stroke survivors generally had a diminished ability to use auditory feedback to control the pitch frequency of vowel production (e.g., saying /aaaah/) compared with neurotypical adults. Our data also showed that this pattern of speech motor disorder was strongly related to damage in three major anatomical brain areas in the left hemisphere:

- the prefrontal cortex, an area responsible for planning and producing speech muscle movements;
- the temporal cortex, an area responsible for processing incoming speech sounds during speaking;
- the parietal cortex, an area responsible for linking speech sound errors to the speech movement commands that help us correct those errors (see Fig. 3 below).

Fig. 3. A) Profile of speech responses to auditory feedback errors, demonstrating a diminished ability for speech error correction in post-stroke aphasia. B) Damage to brain anatomical networks in the temporal cortex (top), prefrontal cortex (middle), and parietal cortex (bottom), showing a strong relationship with speech motor disorder in aphasia (figures adapted from Behroozmand et al., NeuroImage, 2018).
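One common way to quantify a speech response to a pitch error in auditory feedback has three steps. First, express the produced pitch (f0) in cents relative to a pre-perturbation baseline, where 100 cents is one semitone. Second, average that value over a window after the perturbation. Third, read a response that opposes the shift as error correction. The sketch below applies this to synthetic traces. The +100-cent upward feedback shift, the 200 Hz voice, the baseline and response windows, and the two "gain" values are all hypothetical choices for illustration. They are not parameters or results from Behroozmand et al. (2018), and the function names are likewise hypothetical.

```python
import math

def hz_to_cents(f_hz, ref_hz):
    """Pitch difference in cents (100 cents = 1 semitone, 1200 cents = 1 octave)."""
    return 1200.0 * math.log2(f_hz / ref_hz)

def simulate_trace(gain, shift_cents=100.0, f0_hz=200.0, fs=1000):
    """Hypothetical produced-f0 trace from -0.2 s to 0.3 s around a feedback pitch shift at t = 0.

    About 50 ms after the shift, the talker lowers f0 by gain * shift_cents,
    i.e. opposes the upward shift. This is a toy model, not a physiological one.
    """
    times, f0 = [], []
    for n in range(int(0.5 * fs)):
        t = n / fs - 0.2
        correction = -gain * shift_cents if t >= 0.05 else 0.0
        times.append(t)
        f0.append(f0_hz * 2 ** (correction / 1200.0))
    return times, f0

def response_magnitude(times, f0, baseline=(-0.2, 0.0), window=(0.1, 0.3)):
    """Mean f0 change in cents within `window`, relative to the mean f0 in `baseline`."""
    base = [f for t, f in zip(times, f0) if baseline[0] <= t < baseline[1]]
    ref = sum(base) / len(base)
    resp = [hz_to_cents(f, ref) for t, f in zip(times, f0) if window[0] <= t < window[1]]
    return sum(resp) / len(resp)

strong = response_magnitude(*simulate_trace(gain=0.3))   # hypothetical strong corrector
weak = response_magnitude(*simulate_trace(gain=0.05))    # hypothetical reduced corrector
print(round(strong, 1), round(weak, 1))  # -30.0 -5.0
```

A negative value means the simulated talker lowered f0 against the upward shift. The smaller magnitude of the second trace mimics a reduced ability to correct feedback errors, the kind of group difference that panel A of Fig. 3 summarizes for real data.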

These early findings show a promising path toward understanding the neurobiological origins of speech production; however, this field is still relatively young. We are excited to continue working with our collaborators to further advance our understanding of the functional mechanisms underlying this complex and intriguing system. The ultimate goal is not only to understand how the system works, but also to figure out why it may not work properly in some people and how it can be treated clinically. This is an exciting path for contributing to the fields of communication disorders and speech pathology. Future technological and scientific breakthroughs will be key to achieving our goal of providing low-cost, effective treatments.